
March-April 2024


Among supply chain management activities, supplier selection, risk resilience, and contract negotiation are often cited as offering the most potential for generative AI. These areas depend on high volumes of text and data, which makes them particularly suitable for such applications. However, many organizations struggle to overcome the complexities of integrating generative AI into mainstream operations, which is one reason that projects often fail.

A pilot project at the MIT Center for Transportation & Logistics (MIT CTL) aims to overcome these complexities and demonstrate how targeted, real-world applications of generative AI can be successfully implemented in the procurement function. The project team is developing a chatbot for a leading pharmaceutical company with a direct and indirect annual spend of over $35 billion. The bot will help category managers negotiate more effectively with suppliers by providing comprehensive information on key questions like how prices are trending for specific materials.

Addressing data issues

Category managers rely on a multitude of data sources, including spend analyses, bills of materials (BOM), and other industry-specific information, to devise effective negotiation strategies. However, existing sources of this data are often underutilized because they are not easy to access or are relatively limited. For example, ERP systems represent a key source, but the number of systems and their complexity can be problematic for users. Also, current methods for data retrieval, such as visualization dashboards, often require extensive navigation and a steep learning curve for newcomers. Category managers can turn to data scientists for ad-hoc analyses. Still, these specialized staff may be in short supply, and relying on them too much creates bottlenecks that impede the information-gathering process.

With these difficulties in mind, the pilot’s goal is to augment (increase efficiency) and automate (reduce time) the collection and collation of information that category managers need when negotiating with suppliers. While the necessity of data scientists would not be reduced, generative AI could “place a data scientist in the pockets” of every procurement professional in this application area. As a result, the technology would increase managers’ efficiency, democratize data access, and foster data-driven decisions in supplier negotiations. These benefits could be realized regardless of the category managers’ technical prowess or experience level, allowing procurement professionals to focus more on strategic aspects of their jobs.

Choosing the best approach

A defining feature of generative AI is its ability to provide coherent and contextually relevant responses and flexible phrasing options that allow questions to be framed differently. For example, the technology can translate free-form text into SQL or another data-querying language to extract information, thus extending the scope of inquiries beyond pre-defined questions. This capability differentiates the technology from traditional chatbots based on fixed question-and-answer pairs. Furthermore, generative AI can empower users to perform complex tasks like generating graphs and conducting statistical analyses without requiring a coding background. When integrated with more sophisticated models, these tools can even undertake advanced tasks such as predictive and prescriptive analytics, showcasing their versatility and depth in creating new insights from the available data.

Given these capabilities, it is vitally important that the new chatbot is designed to respond to the types of questions the pharmaceutical company’s category managers typically ask in preparation for negotiations with suppliers.

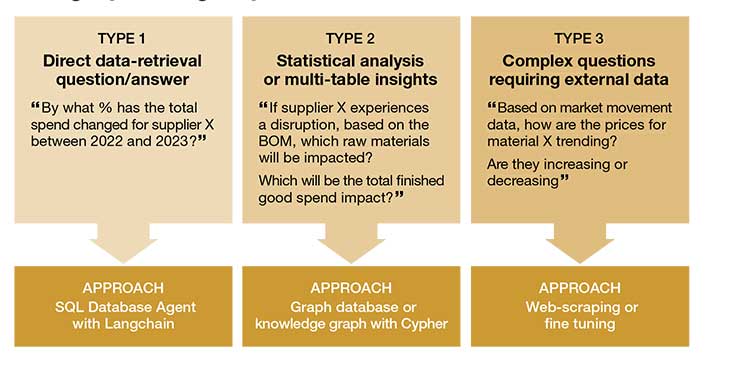

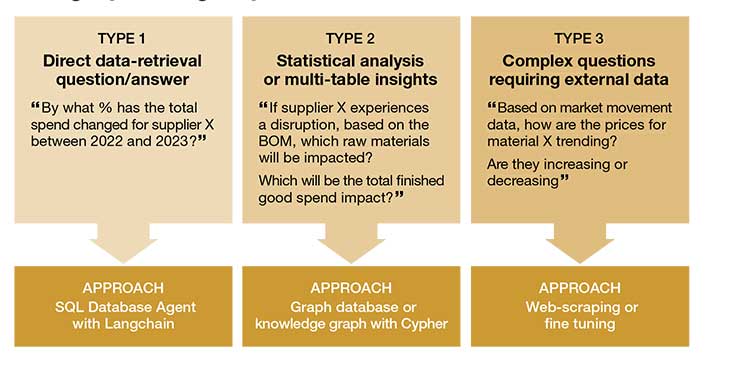

The insights the company’s procurers look for vary in complexity. We initially categorized these questions into separate use cases and organized them into three buckets. This categorization is crucial as each question type can benefit from different models (see Figure 1).

A retrieval-augmented generation (RAG) approach was employed with the company’s actual data. The RAG approach involves retrieving data relevant to a question from a knowledge database and providing it as context to a large language model (LLM) that generates a response. The model was deployed using an LLM from Azure OpenAI within a secure environment. The RAG method can reduce inaccuracies or “hallucinations,” primarily because it prioritizes fetching information from the existing knowledge base, which anchors the content to retrieved, reliable texts.
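The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration rather than the project’s implementation: the sample records, the keyword-overlap scoring, and the function names `retrieve` and `build_prompt` are all assumptions, and the call to the LLM itself is left out. Production systems typically rank documents with embeddings rather than word overlap.

```python
import re

# Hypothetical knowledge base: short text records standing in for company data.
KNOWLEDGE_BASE = [
    "Spend on solvent A rose 12% between 2022 and 2023.",
    "Supplier X provides packaging film under a three-year contract.",
    "The bill of materials for product P lists solvent A and resin B.",
]


def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question; return the top_k."""
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, context_docs):
    """Assemble the prompt: retrieved context first, then the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


question = "How is spend on solvent A trending?"
context_docs = retrieve(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, context_docs)
# `prompt` would then be sent to the LLM, which generates the response.
```

Because the prompt instructs the model to rely on the retrieved records, the answer stays tied to what is actually in the knowledge base.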

An important question for the project team is whether it is better to use an off-the-shelf AI solution to meet these various demands or to develop a solution in-house. An off-the-shelf chatbot can avoid the time and cost of developing a tailored solution. However, the team opted for an in-house solution for several reasons.

Generative AI is not protected against the well-established “garbage in, garbage out” or GIGO effect, which underscores the importance of data quality and modeling. The complexity of the models and data architecture significantly impacts the resulting output. Hence, the benefit of designing a tailored model while still using a proprietary LLM is the increased flexibility this affords data scientists to swiftly iterate, test various models, and deploy solutions in secure, private environments. Another advantage of the in-house option is that it can be tailored to specific organizational needs while keeping costs and the need for computationally skilled persons manageable. Furthermore, given the experimental nature of this field, piloting a proof of concept first can help foster trust from relevant stakeholders and make it easier to secure investment funds.

The project’s “low-hanging fruit” was to address Type 1 questions first (see Figure 1). Preliminary iterations utilized a LangChain SQL Database agent (LangChain is a Python library for building LLM applications, with agents that can be deployed for different tasks). Much higher accuracy was achieved with careful prompt engineering (designing the input to produce an optimal output), such as intentionally formatting the free-form text to replicate query language.
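To make the idea of query-like phrasing concrete, the sketch below contrasts a loosely worded question with one structured to mirror a query. It is an illustration under stated assumptions: the `spend` table, its columns, the sample rows, and the helper `build_sql_prompt` are hypothetical, and no LLM or LangChain agent is called. The SQL is written by hand to show what a correct translation of the structured question would look like.

```python
import sqlite3

# Hypothetical spend table; column names are illustrative assumptions.
SCHEMA = "CREATE TABLE spend (material TEXT, supplier TEXT, year INTEGER, amount REAL)"
ROWS = [
    ("solvent A", "Supplier X", 2022, 100.0),
    ("solvent A", "Supplier X", 2023, 120.0),
    ("solvent A", "Supplier Y", 2023, 30.0),
    ("resin B", "Supplier Y", 2023, 80.0),
]

# A loosely phrased question leaves the filter and the aggregation implicit.
loose_question = "What's going on with solvent A costs?"

# The same request phrased to mirror query structure.
structured_question = (
    "From table spend, filter material = 'solvent A', "
    "group by year, sum amount, order by year."
)


def build_sql_prompt(schema, question):
    """Combine the table schema and the question into one prompt string."""
    return (
        "Translate the question into one SQLite query.\n"
        f"Schema: {schema}\n"
        f"Question: {question}"
    )


prompt = build_sql_prompt(SCHEMA, structured_question)

# The query a correct translation of the structured question should produce.
# It is written by hand here; no LLM is called in this sketch.
expected_sql = (
    "SELECT year, SUM(amount) FROM spend "
    "WHERE material = 'solvent A' GROUP BY year ORDER BY year"
)

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany("INSERT INTO spend VALUES (?, ?, ?, ?)", ROWS)
result = conn.execute(expected_sql).fetchall()
conn.close()
```

Each clause of the structured question maps to one clause of the query, which leaves the model far less to guess than the loose version does.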

This approach poses two critical questions: how can the likelihood of user error be accounted for, and how can category managers be protected from potentially inaccurate information when questions are posed ambiguously?

Figure 1: Category manager questions

Source: Authors

One strategy is to encourage category managers to learn and embrace pseudocode, a mix of plain language and coding syntax that explains how a program should work without using an actual programming language. Even without a technical background, users can greatly increase the tool’s accuracy by using words like “filter” and “aggregate” or by first breaking down complex queries into smaller sentences.

Another approach focuses on refining the user interface. Implementing a dropdown menu can guide users away from entering free-form text where this type of input is not ideal for the model. A help area can also be included to help users clarify their objectives before they enter free-form text. In addition, an instruction-tuning facility at the back end of the application can guide the agent in answering a specific category of questions in a purposely directed way.
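One way a dropdown menu could work behind the scenes is to map each menu option to a fixed query template, so that common questions bypass free-form interpretation entirely. The sketch below is an assumption about how such a menu might be wired, not a description of the pilot’s interface: the option labels, the `spend` table, and the function `run_menu_query` are hypothetical.

```python
import sqlite3

# Hypothetical dropdown options mapped to fixed, pre-reviewed SQL templates.
QUERY_TEMPLATES = {
    "Total spend by year": (
        "SELECT year, SUM(amount) FROM spend "
        "WHERE material = ? GROUP BY year ORDER BY year"
    ),
    "Suppliers for material": (
        "SELECT DISTINCT supplier FROM spend WHERE material = ? ORDER BY supplier"
    ),
}


def run_menu_query(conn, option, material):
    """Run the template behind a dropdown option; reject unknown options."""
    if option not in QUERY_TEMPLATES:
        raise ValueError(f"Unknown option: {option}")
    # The material is passed as a bound parameter, never spliced into the SQL.
    return conn.execute(QUERY_TEMPLATES[option], (material,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE spend (material TEXT, supplier TEXT, year INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO spend VALUES (?, ?, ?, ?)",
    [
        ("solvent A", "Supplier X", 2022, 100.0),
        ("solvent A", "Supplier Y", 2023, 30.0),
        ("resin B", "Supplier Y", 2023, 80.0),
    ],
)
suppliers = run_menu_query(conn, "Suppliers for material", "solvent A")
```

Questions that fit a template get a deterministic answer, and free-form input can be reserved for the cases where the model’s flexibility is actually needed.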

Next steps

Following a review of the preliminary chatbot version by the sponsor company’s CPO, the plan is to refine the model and develop a comprehensive roadmap to scale it further. A particularly promising avenue involves using a graph database or knowledge graph instead of a relational database to establish connections between BOMs and spend data, unlocking more profound insights geared toward addressing more complex questions. This refinement represents a significant opportunity to enhance the procurement organization’s analytical capabilities.
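The appeal of a graph structure is that relationships between BOMs and spend data become explicit hops rather than table joins. The sketch below uses a plain Python index instead of a graph database, and every name in it (the edge list, the relation labels `contains` and `supplied_by`, and the function `products_exposed_to`) is a hypothetical stand-in chosen for illustration.

```python
from collections import defaultdict

# Hypothetical edges: (source, relation, target). BOM edges link products to
# materials; spend edges link materials to suppliers.
EDGES = [
    ("product P", "contains", "solvent A"),
    ("product P", "contains", "resin B"),
    ("product Q", "contains", "resin B"),
    ("solvent A", "supplied_by", "Supplier X"),
    ("resin B", "supplied_by", "Supplier Y"),
]


def build_index(edges):
    """Index edges by (target, relation) so they can be walked backwards."""
    index = defaultdict(set)
    for source, relation, target in edges:
        index[(target, relation)].add(source)
    return index


def products_exposed_to(supplier, index):
    """Find products whose BOM includes any material from the supplier."""
    materials = index[(supplier, "supplied_by")]
    products = set()
    for material in materials:
        products |= index[(material, "contains")]
    return sorted(products)


index = build_index(EDGES)
exposed = products_exposed_to("Supplier Y", index)
```

A question such as “which products depend on Supplier Y?” becomes a two-hop walk from supplier to material to product, the kind of multi-step question that is harder to phrase against separate relational tables.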

We also intend to research and develop a roadmap to facilitate the full-scale deployment of a fully accessible chatbot. This will involve outlining the roles and responsibilities of the functional groups involved in this process.

Significant challenges must be overcome before the chatbot becomes integral to the company’s procurement operations. These include data quality and access issues, API permissions for security, difficulties of latency and accuracy when using relational databases for the LLM, and the reliability of deployed applications.

However, the chatbot has the potential to deliver substantial benefits. The project could also set the stage for steady, transformative progress in advanced AI and establish a new benchmark for efficiency and innovation in procurement.


